Low FPS because of bad GPU usage?

Helikaon Germany Join Date: 2016-07-07 Member: 219775Members

Hello Community,

I first played Subnautica on the recommended graphics settings, but after the game crashed several times I switched to the minimal settings and it worked. Over time, however, my frame rate began to drop, and now I often get only 2-10 FPS, so I can't play the game anymore. The big problem is that my PC fully meets the minimum system requirements, and I have some friends with much weaker systems who can play Subnautica without any frame rate problems. So I launched my GPU Tweak program to see what might be wrong, and I saw that my GPU usage swings wildly between 0 and 100%. Normally it should stay at 100% the whole time.

I started some other games and found out that I only have this problem in Subnautica. My drivers are all up to date. Maybe one of you has the same problem, knows a solution, or has an idea.

greetings

Helikaon

my system:

CPU: Intel Core i5 4460 4x 3.20GHz

GPU: 4096MB Asus Radeon R9 380 Strix Gaming Direct CU II

RAM: 8GB Crucial Ballistix Sport DDR3-1600

Mainboard: ASRock B85M Pro3 Intel B85 So.1150 Dual Channel DDR mATX Retail

Currently I don't use an SSD.

results in numbers:

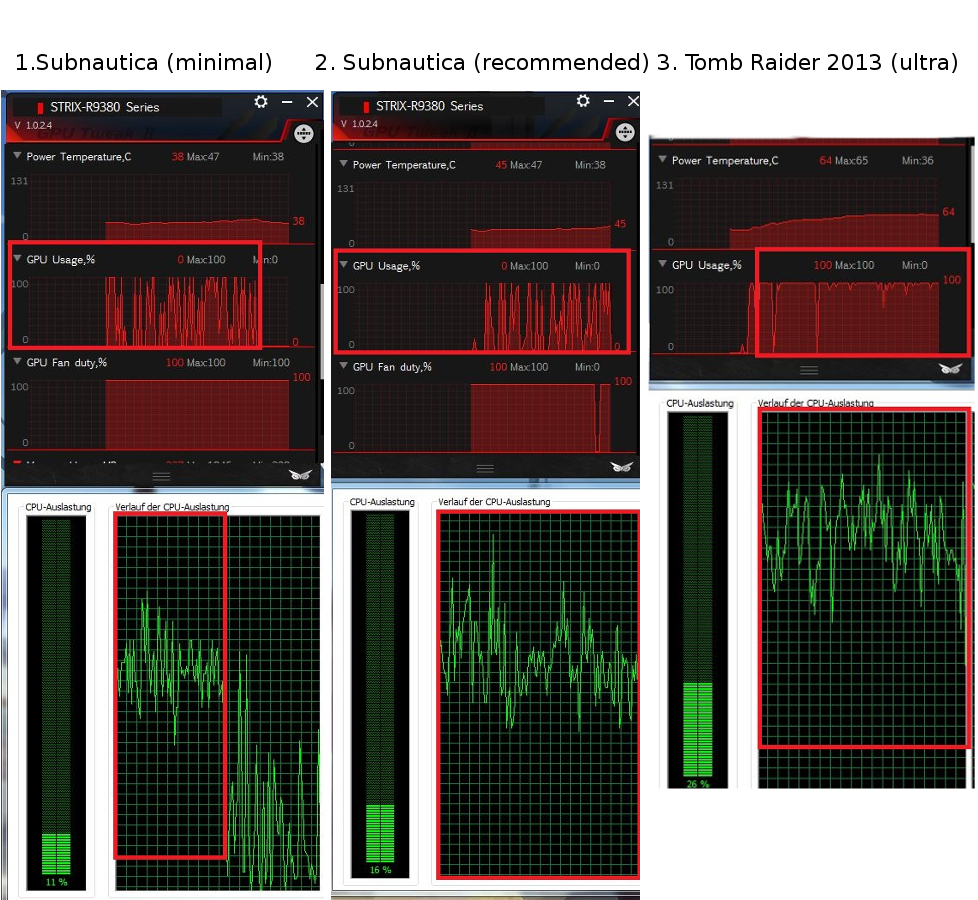

Subnautica (recommended and minimal settings): CPU: 55-75%; GPU: 0-100%; FPS: 2-35; temperature: 40-45°C

Tomb Raider 2013 (ultra): CPU: 65-90%; GPU: 90-100%; FPS: 35-55; temperature: 40-65°C

The results, recorded with Task Manager and my GPU Tweak program:

Note: the green curve shows the CPU usage and the red curve the GPU usage. The red outline marks the time I was actually in-game. I had the fans at 100% so the temperature stayed low, to rule out heat skewing the results.

Comments

My specs:

CPU: Intel Core i7 4770K

GPU: GTX 760 Ti

RAM: 16 GB Corsair XM3 DDR3-1600

I have the game installed on a Samsung EVO SSD.

My CPU load is under 2%.

No idea what my GPU load is, I'll check next time

It's chewing through my RAM, though: it takes up between 5 and 8 GB.

The FPS I am getting on minimum settings is unplayable.

The game started out perfect, but as I explored and built bases, everything bogged down.

There's a huge performance drain somewhere.

The PC I'm using is very similar to the OP's: an R9 255 with 8 GB of RAM. Yet for me the game runs as well as you can expect on recommended settings.

I was playing on max settings but the RAM bloated so much it was unplayable after 10 or so minutes. On recommended I can play for hours with no issue.

The Cyclops causes massive frame rate drops for me, however. The game is not optimised well yet.

Also, don't screw around with the terrain because that seems to make a big difference in my experience. The more digging, the worse it gets.

First-gen FX-8320 OC'd to 4.2 GHz

8 GB 1600 MHz RAM

1 SSD + 1 HDD: I had the game on both, and there was no difference in performance aside from the initial map load taking about 3 minutes longer on my HDD.

R9 280x GPU

990FXA-UD3 mobo

My guess would simply be that they need a more efficient way to store positions of things. A snap-to system for indoor item placement would be the first place I'd start. Ladders have fixed placement points, as do giant aquariums - why not do this for everything? It would probably take less memory for the game to remember presorted slots than trying to remember exact X/Y/Z positions of every single sign, locker and flowerpot down to the exact pixel. Plus it would simply look more organized by default.
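To make the snap-to idea concrete, here is a minimal Python sketch. It is purely hypothetical: nobody in this thread knows how Subnautica actually stores placed items, and the room grid (8 by 4 by 8 slots), the 0.5 m cell size, and the names `Placement`, `snap_to_slot`, `slot_to_position`, `pack_exact` and `pack_slot` are all made up for illustration. It compares storing a room id plus three 32-bit floats against a room id plus a one-byte slot index.

```python
import struct
from dataclasses import dataclass

# Hypothetical room grid: 8 x 4 x 8 = 256 placement slots per room.
GRID_X, GRID_Y, GRID_Z = 8, 4, 8
CELL_SIZE = 0.5  # metres between neighbouring slots (assumed)


@dataclass
class Placement:
    room_id: int  # which base room the item sits in
    x: float      # room-local position in metres
    y: float
    z: float


def pack_exact(p: Placement) -> bytes:
    """Room id (uint16) plus the exact position as three 32-bit floats."""
    return struct.pack("<Hfff", p.room_id, p.x, p.y, p.z)


def snap_to_slot(x: float, y: float, z: float) -> int:
    """Round to the nearest slot (ties round to even) and clamp into the grid."""
    ix = min(max(round(x / CELL_SIZE), 0), GRID_X - 1)
    iy = min(max(round(y / CELL_SIZE), 0), GRID_Y - 1)
    iz = min(max(round(z / CELL_SIZE), 0), GRID_Z - 1)
    return (ix * GRID_Y + iy) * GRID_Z + iz


def slot_to_position(slot: int) -> tuple:
    """Recover a slot's room-local position from its index."""
    iz = slot % GRID_Z
    ix, iy = divmod(slot // GRID_Z, GRID_Y)
    return (ix * CELL_SIZE, iy * CELL_SIZE, iz * CELL_SIZE)


def pack_slot(p: Placement) -> bytes:
    """Room id (uint16) plus a one-byte slot index (0-255)."""
    return struct.pack("<HB", p.room_id, snap_to_slot(p.x, p.y, p.z))


item = Placement(room_id=3, x=1.23, y=0.4, z=2.6)
print(len(pack_exact(item)), len(pack_slot(item)))  # 14 3
print(snap_to_slot(item.x, item.y, item.z))          # 77
print(slot_to_position(77))                          # (1.0, 0.5, 2.5)
```

Only the position field shrinks here (14 bytes down to 3 per item); real save data would carry much more per object, so whether this alone would explain the slowdown is speculation.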

Memory loading in general is kind of sluggish, too. In a Seamoth I can easily outrun the game's ability to render terrain, turning the entire map into sandy plains until I slow down and let the loading catch up with where I am. That might just be my own system at fault, however, as this can happen even right after I start the game.

But yes, there is a definite rapid decay in performance as things are added. By the time I've finished even one small base (5 rooms, tops), I've probably already lost half my FPS. What makes it so confounding is that the performance loss remains even when I'm all the way at the literal edge of the map, staring into the endless drop beyond it. Not so much as a single piece of flora is within visual range, and yet the game still runs as if I were staring at a three-story aquarium inside a base the size of the Taj Mahal.

And that screams "MEMORY LEAK" like nothing else. My guess is that the game is honestly trying to keep literally every single player placed item loaded into RAM at all times, regardless of whether or not you're actually looking at it or even if you're within a thousand meters of it. It would be far more efficient to rewrite the entire engine to:

1) Duplicate the core map files into the savegame folder. It may already be doing this but,

2) Write player placed objects and terraforming changes directly to this duplicate file on the hard drive and then

3) Dump all that crap from RAM when not in use.

4) Buffer writes so the game only saves around once every 5 minutes or every 256 MB of changes while the Builder tool is equipped, to avoid needless hard drive fatigue.

Note: I don't mean do the Bethesda thing where you make a "main" and then let player actions "mod" things in some sort of convoluted priority tree. No, I mean dupe the map file entirely and then change that dupe file whenever the player does something. That way you're not loading layers of crap for no good reason. Just one file with all the data. It may be a bit slower than the clean-slate fresh start of the game feeling, but it won't get all bogged down so easily later on.
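The rewrite proposed in the list above could be sketched roughly as follows. This only illustrates the poster's idea and says nothing about how Subnautica actually works: `SaveStore`, `PlacedObject`, the 500 m `LOAD_RADIUS_M`, and the plain `disk` dictionary standing in for the duplicated map file are all hypothetical. Item 4 is read here as "while the Builder tool is equipped, batch writes until 5 minutes have passed or 256 MB of changes have piled up; otherwise write right away", which is one interpretation of the wording.

```python
import math
from dataclasses import dataclass, field

FLUSH_INTERVAL_S = 5 * 60        # "around once every 5 minutes"
FLUSH_BYTES = 256 * 1024 * 1024  # "or 256 MB of changes"
LOAD_RADIUS_M = 500.0            # hypothetical streaming radius


@dataclass
class PlacedObject:
    obj_id: int
    pos: tuple       # (x, y, z) in metres
    size_bytes: int  # approximate serialized size


@dataclass
class SaveStore:
    """Hypothetical store; `disk` stands in for the duplicated map file."""
    disk: dict = field(default_factory=dict)      # obj_id -> object already written
    resident: dict = field(default_factory=dict)  # obj_id -> object kept in RAM
    pending: dict = field(default_factory=dict)   # changes not yet written
    pending_bytes: int = 0
    last_flush: float = 0.0

    def place(self, obj: PlacedObject) -> None:
        """Record a new or changed object; it stays in RAM until written."""
        self.resident[obj.obj_id] = obj
        self.pending[obj.obj_id] = obj
        self.pending_bytes += obj.size_bytes

    def maybe_flush(self, now: float, builder_equipped: bool) -> bool:
        """Write pending changes; while building, wait for a time or size threshold."""
        if not self.pending:
            return False
        due = (now - self.last_flush >= FLUSH_INTERVAL_S
               or self.pending_bytes >= FLUSH_BYTES)
        if builder_equipped and not due:
            return False  # still building: keep batching writes
        self.disk.update(self.pending)
        self.pending.clear()
        self.pending_bytes = 0
        self.last_flush = now
        return True

    def update_residency(self, player_pos: tuple) -> None:
        """Drop far-away objects from RAM and reload nearby ones from the save."""
        for obj_id, obj in list(self.resident.items()):
            # Never drop an object whose latest state has not been written yet.
            if obj_id not in self.pending and math.dist(obj.pos, player_pos) > LOAD_RADIUS_M:
                del self.resident[obj_id]
        for obj_id, obj in self.disk.items():
            if obj_id not in self.resident and math.dist(obj.pos, player_pos) <= LOAD_RADIUS_M:
                self.resident[obj_id] = obj
```

For example, placing an object and calling `maybe_flush(now=60.0, builder_equipped=True)` returns `False` (still batching), while the same call at `now=300.0` writes it out; after that, `update_residency((1000.0, 0.0, 0.0))` drops it from RAM. Objects with unsaved changes are never unloaded, so dumping things from RAM (item 3) cannot lose work that the buffering in item 4 has not written yet.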